Revision as of 08:04, 23 June 2015

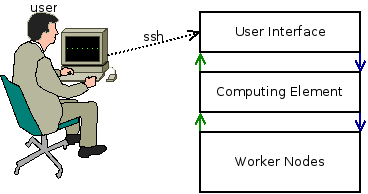

IIHE local cluster

Overview

The cluster is composed of four machine types:

- User Interfaces (UI)

This is the cluster front end: to use the cluster, you need to log in to one of these machines.

Servers: ui01, ui02

- Computing Element (CE)

This server is the core of the batch system: it runs the submitted jobs on the worker nodes.

Servers: ce

- Worker Nodes (WN)

These provide the computing power of the cluster: they run the jobs and report their status back to the CE.

Servers: slave*

- Storage Elements

These hold the cluster's data: they store data, software, etc.

Servers: datang (/data, /software), lxserv (/user), x4500 (/ice3)

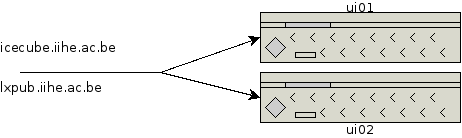

How to connect

To connect to the cluster, use your IIHE credentials (the same as for the Wi-Fi):

ssh username@icecube.iihe.ac.be

TIP: icecube.iihe.ac.be and lxpub.iihe.ac.be automatically point to an available UI (ui01, ui02, ...).

After a successful login, you will see this message:

    ==========================================
    Welcome on the IIHE ULB-VUB cluster
    Cluster status   http://ganglia.iihe.ac.be
    Documentation    http://wiki.iihe.ac.be/index.php/Cluster
    IT Help          support-iihe@ulb.ac.be
    ==========================================
    username@uiXX:~$

Your default current working directory is your home folder.

Directory Structure

Here is a description of the most useful directories:

/user/{username}

Your home folder

/data

Main data repository

/data/user/{username}

Your personal data folder

/data/ICxx

IceCube datasets

/software

The local software area

/ice3

This is the old software area. We strongly recommend building your tools in the /software directory instead.

/cvmfs

Centralised CVMFS repository for the IceCube software

Batch System

Queues

The cluster is divided into the following queues:

| | any | lowmem | standard | highmem | express | gpu |
|---|---|---|---|---|---|---|
| Description | Default queue, all available nodes (except GPUs) | 2 GB RAM | 3 GB RAM | 4 GB RAM | Limited walltime | Dedicated GPU queue |
| CPUs (jobs) | 494 | 88 | 384 | 8 | 24 | 14 |
| Walltime default/limit | 144 hours (6 days) / 240 hours (10 days) | 144 hours (6 days) / 240 hours (10 days) | 144 hours (6 days) / 240 hours (10 days) | 144 hours (6 days) / 240 hours (10 days) | 15 hours / 15 hours | 50 hours / 50 hours |
| Memory default/limit | 2 GB | 2 GB | 3 GB | 4 GB | 2 GB | |
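The memory and walltime figures in the queue table can be turned into a quick rule of thumb for choosing a CPU queue. The sketch below is a hypothetical bash helper (suggest_queue is not an existing cluster command) that takes a job's memory in GB and walltime in hours as whole numbers. The preference order is an assumption, not an official IIHE policy: it tries express first for short, low-memory jobs, then picks the smallest memory class that fits, and it ignores the gpu queue and the catch-all any queue.

```shell
#!/bin/bash
# Hypothetical helper: suggest a CPU queue from the limits in the Queues table.
# Usage: suggest_queue MEM_GB WALLTIME_HOURS   (whole numbers)
# The preference order is an assumption, not an official IIHE policy.
suggest_queue() {
  local mem_gb=$1 hours=$2
  if (( hours <= 15 && mem_gb <= 2 )); then
    echo express            # 15 h walltime limit, 2 GB memory
  elif (( hours > 240 )); then
    echo "none: the longest CPU walltime limit is 240 hours" >&2
    return 1
  elif (( mem_gb <= 2 )); then
    echo lowmem             # 2 GB, up to 240 h
  elif (( mem_gb <= 3 )); then
    echo standard           # 3 GB, up to 240 h
  elif (( mem_gb <= 4 )); then
    echo highmem            # 4 GB, up to 240 h
  else
    echo "none: the largest memory limit is 4 GB" >&2
    return 1
  fi
}

suggest_queue 3 48    # prints "standard"
```

The result can be passed straight to qsub, for example qsub -q "$(suggest_queue 3 48)" myjob.sh.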

Job submission

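To submit a job, use the qsub command:

qsub myjob.sh

OPTIONS

-q queueName: choose the queue (default: any)

-N jobName: set the name of the job

-I: run in interactive mode (opens a shell on a worker node instead of running a script)

-m: mail options, i.e. when to send an e-mail about the job (a = abort, b = begin, e = end)

-l: resource options (e.g. -l walltime=10:00:00,mem=3gb)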
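The options above can also be written into the job script itself as #PBS directives, which qsub reads from the comment lines placed before the first command. A minimal sketch of myjob.sh follows, assuming a Torque/PBS batch system (which the qsub/qstat commands suggest); the job name, queue, resource values and the commented-out my_analysis program are hypothetical placeholders to adapt to your own job.

```shell
#!/bin/bash
# myjob.sh: example Torque/PBS job script (all values below are placeholders).
# Queue to use (see the Queues table):
#PBS -q standard
# Job name:
#PBS -N myanalysis
# Requested walltime (HH:MM:SS) and memory:
#PBS -l walltime=10:00:00
#PBS -l mem=3gb
# Send an e-mail if the job aborts (a) or ends (e):
#PBS -m ae

# Batch jobs start in the home folder; PBS_O_WORKDIR is the directory qsub was run from.
# Outside the batch system the variable is unset, so fall back to the current directory.
WORKDIR="${PBS_O_WORKDIR:-$PWD}"
cd "$WORKDIR" || exit 1

echo "Job ${PBS_JOBID:-not-in-batch} on ${HOSTNAME:-unknown}, queue ${PBS_QUEUE:-none}"

# Replace this with the real work, e.g. a (hypothetical) analysis program:
# ./my_analysis --input "/data/user/$USER/input.i3"
```

Submit it with qsub myjob.sh. An option given on the qsub command line, for instance qsub -q express myjob.sh, takes precedence over the matching #PBS line in the script.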

Job management

To see all jobs (running or queued), use the qstat command or visit the JobMonArch page:

qstat

OPTIONS

-u username: list only the jobs submitted by username

-n: show the nodes on which the jobs are running

-q: show how the jobs are distributed across the queues
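For a quick count of your own jobs per state, the output of qstat -u can be post-processed with standard tools. The helper below (job_state_summary is a hypothetical name, not a cluster command) assumes the default Torque layout of qstat -u, in which each job line starts with a numeric job ID and the job state (R = running, Q = queued, ...) is the second-to-last column; check this against the actual output on a UI before relying on it.

```shell
#!/bin/bash
# Count jobs per state from "qstat -u <user>" output read on stdin.
# Assumption: job lines start with a numeric job ID and the state is the
# second-to-last column (default Torque layout). Header lines are skipped.
job_state_summary() {
  awk '$1 ~ /^[0-9]+/ { count[$(NF-1)]++ }
       END { for (s in count) printf "%s %d\n", s, count[s] }' | sort
}

# On a UI, summarise your own jobs (skipped where qstat is not installed).
if command -v qstat >/dev/null 2>&1; then
  qstat -u "$USER" | job_state_summary
fi
```

Each output line gives a state letter and the number of your jobs in that state.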

Useful links

Ganglia Monitoring: server status

JobMonArch: job overview