Cluster

IIHE local cluster

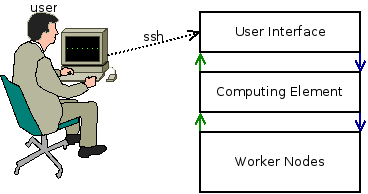

Overview

The cluster is composed of 4 machine types :

- User Interfaces (UI)

These are the cluster front-ends : to use the cluster, you need to log into one of these machines

Servers : ui01, ui02

- Computing Element (CE)

This server is the core of the batch system : it runs submitted jobs on the worker nodes

Servers : ce

- Worker Nodes (WN)

These provide the computing power of the cluster : they run jobs and send their status back to the CE

Servers : slave*

- Storage Elements

This is the memory of the cluster : they contain data, software, ...

Servers : datang (/data, /software), lxserv (/user), x4500 (/ice3)

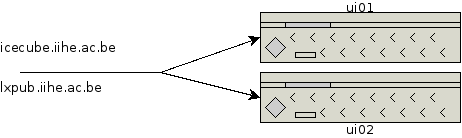

How to connect

To connect to the cluster, you must use your IIHE credentials (same as for wifi)

ssh username@icecube.iihe.ac.be

TIP : icecube.iihe.ac.be and lxpub.iihe.ac.be automatically point to an available UI (ui01, ui02, ...)

After a successful login, you'll see this message :

    ==========================================
    Welcome on the IIHE ULB-VUB cluster
    Cluster status   http://ganglia.iihe.ac.be
    Documentation    http://wiki.iihe.ac.be/index.php/Cluster
    IT Help          support-iihe@ulb.ac.be
    ==========================================
    username@uiXX:~$

Your default current working directory is your home folder.

Directory Structure

Here is a description of the most useful directories

/user/username

Your home folder

/data

Main data repository

/data/user

Users data folder

/data/ICxx

IceCube datasets

/software

The custom software area

/software/src

Sources of software to install

/software/icecube

IceCube-specific tools

/software/icecube/ports

This folder contains the I3 ports used by IceCube (meta-)projects

In order to use it, you must define the environment variable $I3_PORTS

export I3_PORTS="/software/icecube/ports"

This variable is set only for the current session and is lost at logout. To avoid typing the command each time, you can add it to your .bashrc
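As a minimal sketch, the export line can be appended to a startup file only if it is not already there. The function name add_i3_ports is hypothetical, and the optional argument for a different startup file is included only for illustration:

```shell
# Hypothetical helper: add the I3_PORTS export to a startup file exactly once.
# $1 is the file to edit; it defaults to ~/.bashrc.
add_i3_ports() {
    rc_file="${1:-$HOME/.bashrc}"
    line='export I3_PORTS="/software/icecube/ports"'
    # Make sure the file exists so that grep does not fail on a missing file
    touch "$rc_file"
    # Append the line only when no identical line is present
    grep -qxF "$line" "$rc_file" || printf '%s\n' "$line" >> "$rc_file"
}
```

Running add_i3_ports once and then opening a new session (or running ". ~/.bashrc") should make $I3_PORTS available without retyping the export.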

List installed ports

$I3_PORTS/bin/port installed

List available ports

$I3_PORTS/bin/port list

Install a port

$I3_PORTS/bin/port install PORT_NAME
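The commands above can be combined so that a port is installed only when it is missing. The sketch below is illustrative: ensure_port is a hypothetical name, it assumes that "port installed" prints one port per line with the port name as the first field, and it assumes you have permission to install into the ports area.

```shell
# Hypothetical helper: install a port only if "port installed" does not list it.
ensure_port() {
    # Abort with a message if I3_PORTS has not been exported
    port_bin="${I3_PORTS:?I3_PORTS is not set}/bin/port"
    # Assumption: the port name is the first field of each output line
    if "$port_bin" installed | awk -v name="$1" '$1 == name { found = 1 } END { exit !found }'; then
        echo "$1 is already installed"
    else
        "$port_bin" install "$1"
    fi
}
```

For example, "ensure_port PORT_NAME" either reports that the port is present or runs the install command shown above.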

/software/icecube/offline-software

This folder contains the offline-software meta-project

To use it, just run the following command (note the dot at the beginning of the line)

. /software/icecube/offline-software/[VERSION]/env-shell.sh

Available versions :

- V14-02-00

/software/icecube/icerec

This folder contains the icerec meta-project

To use it, just run the following command (note the dot at the beginning of the line)

. /software/icecube/icerec/[VERSION]/env-shell.sh

Available versions :

- V04-05-00

- V04-05-00-jkunnen

/software/icecube/simulation

This folder contains the simulation meta-project

To use it, just run the following command (note the dot at the beginning of the line)

. /software/icecube/simulation/[VERSION]/env-shell.sh

Available versions :

- V03-03-04

- V04-00-08

- V04-00-09

- V04-00-09-cuda
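The three meta-projects follow the same /software/icecube/[PROJECT]/[VERSION]/env-shell.sh layout, so the path can be built and checked before sourcing it. This is only a sketch: metaproject_env and the ICECUBE_SOFTWARE override are hypothetical names introduced for illustration.

```shell
# Hypothetical helper: print the env-shell.sh path for a meta-project version.
# ICECUBE_SOFTWARE is an illustrative override; it defaults to /software/icecube.
metaproject_env() {
    project="$1"
    version="$2"
    script="${ICECUBE_SOFTWARE:-/software/icecube}/$project/$version/env-shell.sh"
    # Fail early with a readable message if the version is not installed
    if [ ! -f "$script" ]; then
        echo "No env-shell.sh found for $project $version" >&2
        return 1
    fi
    printf '%s\n' "$script"
}

# Usage on the cluster (note the leading dot):
#   . "$(metaproject_env simulation V04-00-09)"
```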

/ice3

This folder is the old software area. We strongly recommend that you build your tools in the /software directory instead

Batch System

Queues

The cluster is divided into queues

| | any | lowmem | standard | highmem | gpu |
|---|---|---|---|---|---|
| Description | default queue, all available nodes | | | | GPU-dedicated queue |
| CPUs | 542 | 88 | 384 | 16 | 54 |
| Walltime default/limit | 144 hours (6 days) / 240 hours (10 days) | | | | |
| Memory default/limit | 2 GB | 3 GB | 4 GB | | |

Job submission

To submit a job, you just have to use the qsub command :

qsub myjob.sh

OPTIONS

-q queueName : choose the queue (default: any)

-N jobName : name of the job

-I : run the job in interactive mode

-m : mail options

-l : resource options
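A job script such as myjob.sh might look like the sketch below. It assumes the batch system accepts Torque/PBS-style "#PBS" directives, which this page does not state explicitly; the job name, queue, walltime and memory values are examples only.

```shell
#!/bin/bash
# myjob.sh : illustrative job script, assuming Torque/PBS-style "#PBS" directives
# Job name (same as qsub -N)
#PBS -N myjob
# Queue (same as qsub -q); "standard" is one of the queues listed above
#PBS -q standard
# Resource requests (same as qsub -l); the values are examples only
#PBS -l walltime=24:00:00
#PBS -l mem=2gb
# Send mail when the job aborts (a) or ends (e) (same as qsub -m)
#PBS -m ae

# Start from the directory where qsub was called. PBS_O_WORKDIR is set by the
# batch system; fall back to the current directory when the script is run by hand.
cd "${PBS_O_WORKDIR:-$PWD}" || exit 1

# Record which machine the job landed on
job_host="$(uname -n)"
echo "Job running on ${job_host}"
```

The script is submitted with "qsub myjob.sh". Under the same Torque/PBS assumption, options given on the qsub command line (for example "qsub -q gpu myjob.sh") normally take precedence over the directives in the script.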

Job management

To see all jobs (running / queued), you can use the qstat command or go to the JobMonArch page

qstat

OPTIONS

-u username : list only jobs submitted by username

-n : show nodes where jobs are running

-q : show how jobs are distributed across the queues
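These options can be combined. As a small sketch, a shell function (my_jobs is a hypothetical name) can list one user's jobs together with the nodes they run on:

```shell
# Hypothetical helper: list the jobs of one user and the nodes they run on.
# $1 is the username; it defaults to the current user.
my_jobs() {
    qstat -u "${1:-$USER}" -n
}
```

Calling my_jobs without an argument shows your own jobs; "my_jobs username" shows those of another user.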

Useful links

Ganglia Monitoring : Server status

JobMonArch : Jobs overview